//I tackled this challenge in a set of logical steps, looking for blobs that had no views, which had been deleted but not completely deleted. Using this in combination with hosts that had a dip in usage got to several suspect files one of which was the missing interview. Not elegant by any means but it got the job done.

let hosts=

StorageArchiveLogs

| parse EventText with TransactionType ” blob transaction: ‘” BlobURI “‘” *

| parse EventText with * “(” Reads:long “reads)” *

| extend Host = tostring(parse_url(BlobURI).Host)

| summarize Deletes=countif(TransactionType == ‘Delete’),

Creates =countif(TransactionType == ‘Create’),

Reads=sumif(Reads, TransactionType == ‘Read’) by Host

| where Deletes > 0

| where Creates > 0

| where Creates > Deletes

| order by Reads

| take 50;

let Scott =

StorageArchiveLogs

| parse EventText with TransactionType ” blob transaction: ‘” BlobURI “‘” *

| parse EventText with * “(” Reads:long “reads)” *

| extend Host = tostring(parse_url(BlobURI).Host)

| where Host in~ (hosts)

| make-series Count=sumif(Reads, TransactionType == ‘Read’) default=0 on Timestamp step 12h by Host

| project series_periods_validate(Count,14,10), Host, Count

| extend Score = tostring(series_periods_validate_Count_scores[0])

| where Score != “0.0”

| project Host;

let deletes=

StorageArchiveLogs

| parse EventText with TransactionType ” blob transaction:” *

| parse EventText with * “blob transaction: ‘” BlobURI “‘” *

| where EventText has “completely”

| distinct BlobURI;

let uri=

StorageArchiveLogs

| parse EventText with TransactionType ” blob transaction:” *

| parse EventText with “‘ read access (” ReadCount:long ” reads) were detected on the origin”

| parse EventText with * “blob transaction: ‘” BlobURI “‘” *

| extend Host = tostring(parse_url(BlobURI).Host)

| where Host in (Scott)

| project EventText, BlobURI, TransactionType, ReadCount, Host

| summarize Deletes=countif(TransactionType == ‘Delete’),

Creates =countif(TransactionType == ‘Create’),

Reads=sumif(ReadCount, TransactionType == ‘Read’) by Host, BlobURI

| sort by Reads asc

| where Deletes > 0

| where Creates > 0

| distinct BlobURI;

StorageArchiveLogs

| parse EventText with TransactionType ” blob transaction:” *

| parse EventText with * “blob transaction: ‘” BlobURI “‘” *

| where BlobURI in~ (uri) and BlobURI !in (deletes)

| where EventText has_all (“backup”,”create”)

| distinct EventText

| extend SplitAll=split(EventText, ‘ ‘)

| extend Backup=tostring(SplitAll[8])

| project-away EventText, SplitAll

| where Backup contains “mp4”

//A far neater approach by my colleague Nabeel Prior

// get the blob accounts that had 1 new creation each week for 4 weeks

let weeklyCreates =

StorageArchiveLogs

| where EventText startswith “Create blob”

| project

createTS=Timestamp,

blobAccount=substring(EventText, indexof(EventText, “https://”), indexof(EventText, “/”, 1, 1000, 3) – indexof(EventText, “https://”))

| summarize accountCreatesPerWeek = count() by blobAccount, week_of_year(createTS)

| where accountCreatesPerWeek == 1

| summarize count() by blobAccount

| where count_ >= 4;

// get all the creates for the above blob accounts

let allCreates =

StorageArchiveLogs

| where EventText startswith “Create blob”

| project

blobAccount=substring(EventText, indexof(EventText, “https://”), indexof(EventText, “/”, 1, 1000, 3) – indexof(EventText, “https://”)),

createUrl=substring(EventText, indexof(EventText, “https://”), indexof(EventText, “‘ backup is created on “) – indexof(EventText, “https://”)),

backupUrl=tostring(split(replace_string(EventText, “Create blob transaction: ‘”, “”), “‘ backup is created on “, 1))

| join kind=inner weeklyCreates on $left.blobAccount == $right.blobAccount;

// for all creates above, find those that were partially deleted

StorageArchiveLogs

| where EventText startswith “Delete blob”

| extend deleteUrl=substring(EventText, indexof(EventText, “https://”), indexof(EventText, “‘ backup is”) – indexof(EventText, “https://”))

| extend deleteAction=iif(EventText contains “completely”, 1, 0)

| join kind=inner allCreates on $left.deleteUrl == $right.createUrl

| summarize completelyDeleted=sum(deleteAction), firstDelete=min(Timestamp), lastDelete=max(Timestamp), numberOfDeletes=countif(deleteAction == 0) by deleteUrl, backupUrl

| where completelyDeleted==0;

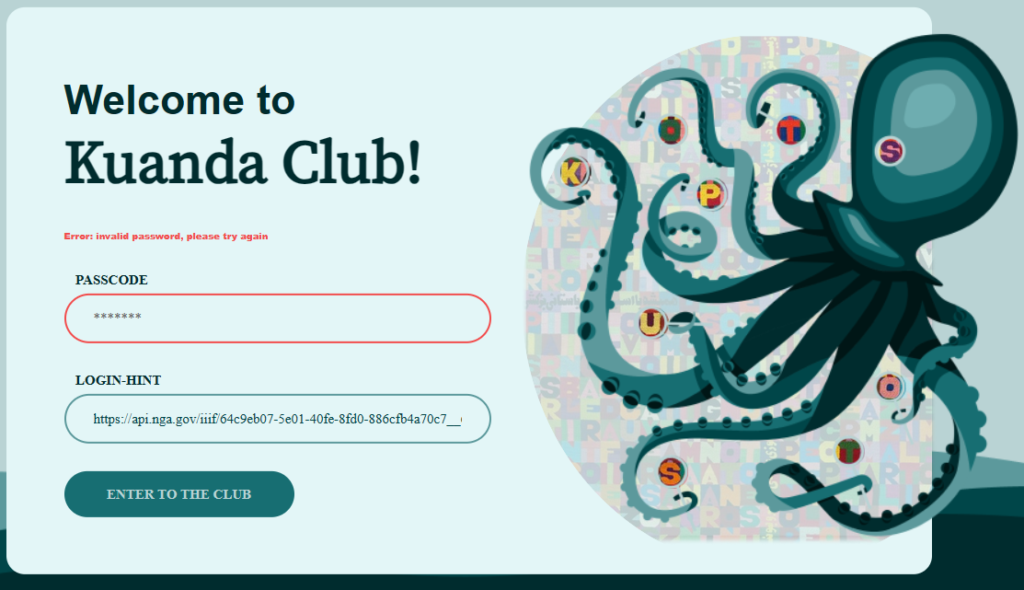

![]()