Welcome back, detectives, to an exciting new season of the Kusto Detective Agency! This time around there are 10 cases to solve and some new tools to help you sharpen your KQL skills.

What is it?

The Kusto Detective Agency is a set of challenges designed to help you learn the Kusto Query Language (KQL), the language used by several Azure services, including Azure Monitor, Sentinel, M365 Defender and Azure Data Explorer (ADX), to name a few. The challenges are gamified and interactive, and they consist of a variety of cases spread across two seasons.

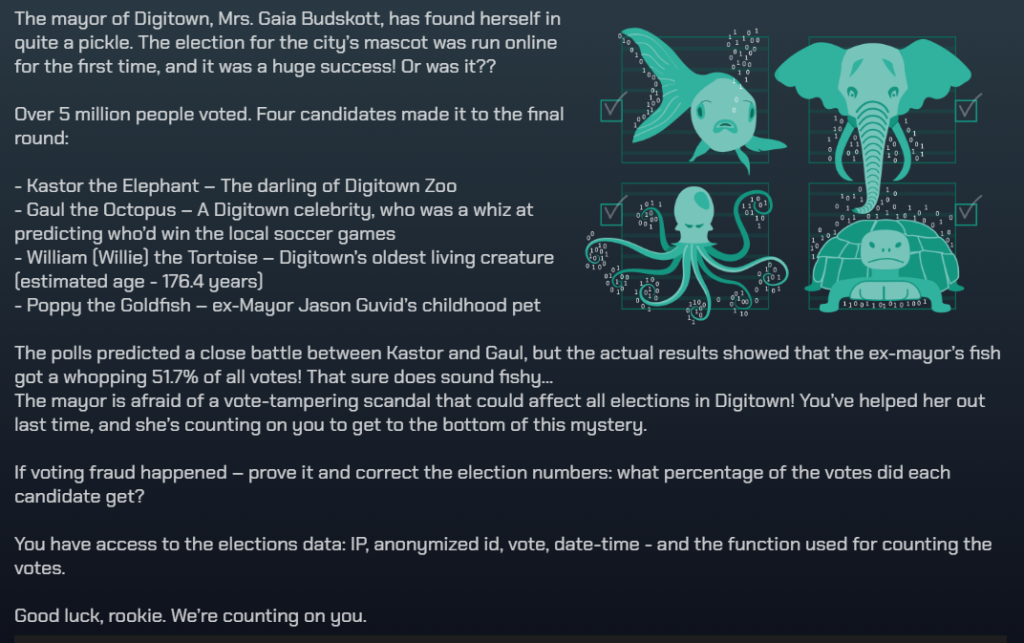

Each case presents a different scenario that you solve using KQL queries, earning badges along the way, and the cases get progressively more difficult as you help the citizens of Digitown.

Season 1 is still available, and I talk about my experience with those challenges here.

Where can I get started?

Getting started is easy: just create your free ADX cluster and report for duty at the detective agency!

Access the challenges here – https://detective.kusto.io/

Create your free ADX cluster here – https://aka.ms/kustofree

What’s new?

Hints return from Season 1, but the exciting new feature is a set of training modules you can complete to prepare for each case. Each module highlights specific commands and techniques that are relevant to solving the puzzles. Just click “Train me for the case” to get started.

My thoughts

KQL is a valuable skill given how many products use the language, and being able to write even a basic query makes working with those products much easier. Learning in this gamified way also makes the process more interesting, and if the Season 2 cases are anything like Season 1, we’re in for a lot of fun. I will be documenting my experience with Season 2, and I highly recommend the Kusto Detective Agency to anyone who could benefit from KQL skills.
